The call drop event total for the number 8119060142 raises questions about underlying problems in the telecommunications network. Network congestion and insufficient signal strength are two likely contributors. Dropped calls frustrate users and damage the credibility of the service provider, so understanding how to reduce them matters for user satisfaction and trust. What measures can address this persistent issue?

Overview of Call Drop Events

Call drop events represent a significant concern in telecommunications, impacting both service providers and users alike.

These incidents cut conversations short, leading to frustration and lower user satisfaction. They also point to weaknesses in network reliability, which seamless communication depends on.

As the demand for uninterrupted service grows, understanding and addressing call drop events becomes crucial for enhancing user experience and maintaining operational efficiency.
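To make the idea of a "call drop event total" concrete, the following minimal sketch tallies dropped calls per number and derives a drop rate. The `CallRecord` type, its fields, and the sample records are all assumptions made for illustration; they are invented data, not real measurements for any number and not a provider's actual record format.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class CallRecord:
    """Hypothetical call record; field names are illustrative assumptions."""
    number: str    # subscriber number
    dropped: bool  # True if the call ended abnormally


def drop_totals(records):
    """Return {number: (dropped_calls, total_calls, drop_rate)}."""
    total = Counter()
    dropped = Counter()
    for record in records:
        total[record.number] += 1
        if record.dropped:
            dropped[record.number] += 1
    # Every number in `total` has at least one call, so no division by zero.
    return {n: (dropped[n], total[n], dropped[n] / total[n]) for n in total}


# Invented sample records, used only to exercise the function.
records = [
    CallRecord("8119060142", False),
    CallRecord("8119060142", True),
    CallRecord("8119060142", False),
    CallRecord("8119060142", True),
    CallRecord("5550000000", False),
]

summary = drop_totals(records)
print(summary["8119060142"])
```

Reporting the rate alongside the raw total is a deliberate choice: a total by itself says little without knowing how many calls were attempted.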

Factors Contributing to Call Drops

Numerous factors contribute to the occurrence of call drops, each playing a critical role in network performance.

Network congestion occurs when a cell has too little capacity for the number of active users, which can interrupt calls in progress.

Additionally, poor signal strength, caused by physical barriers or distance from cell towers, can severely degrade call quality and cause calls to drop.

These elements collectively undermine the reliability of mobile networks, resulting in frustrating user experiences.
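The two factors described above could be separated in drop logs with a simple rule-based check. This is only a sketch under stated assumptions: the threshold values, the `likely_cause` helper, and the sample drop events are hypothetical placeholders, not values taken from any standard or operator.

```python
from collections import Counter

# Assumed, illustrative thresholds; real values depend on the network.
WEAK_SIGNAL_DBM = -110.0  # signal level below this counts as weak
HIGH_LOAD = 0.9           # fraction of cell capacity in use above this counts as congested


def likely_cause(signal_dbm, cell_load):
    """Label a dropped call with its most likely contributing factor."""
    if signal_dbm < WEAK_SIGNAL_DBM:
        return "weak_signal"
    if cell_load > HIGH_LOAD:
        return "congestion"
    return "other"


# Invented drop events as (signal level in dBm, cell load) pairs.
drops = [(-118.0, 0.40), (-95.0, 0.97), (-92.0, 0.35), (-121.0, 0.95)]

causes = Counter(likely_cause(signal, load) for signal, load in drops)
print(dict(causes))
```

Weak signal is checked first, so a drop that shows both conditions is counted once, under `weak_signal`. That ordering is an arbitrary choice for this sketch.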

Impact on User Experience

The reliability of a mobile network directly shapes user experience, and dropped calls are among its most visible failures.

Frequent interruptions frustrate users and undermine their trust in the service. As users increasingly depend on seamless communication, each disruption adds to dissatisfaction and lowers their overall perception of the service provider.

Thus, understanding call drop events is essential to enhance user satisfaction and loyalty.

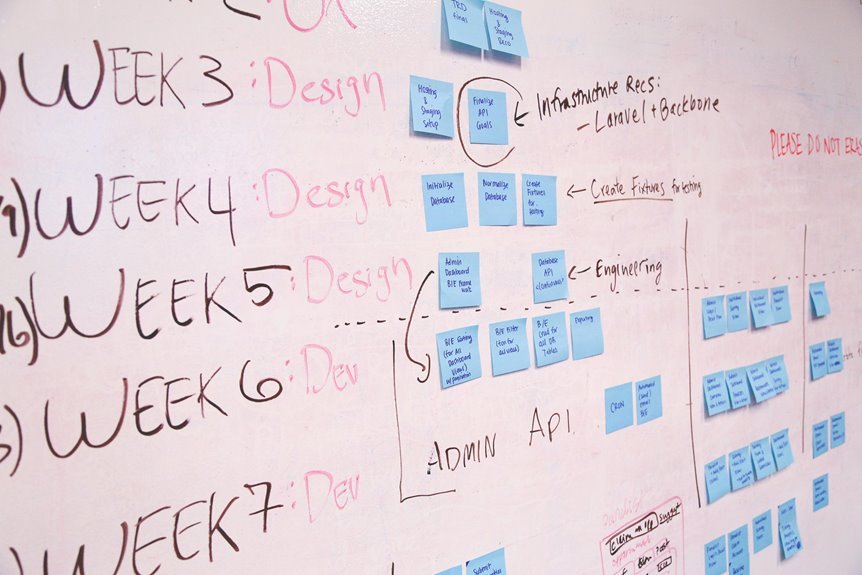

Solutions to Reduce Call Drop Events

Reducing call drop events is crucial for maintaining user satisfaction, and no single fix is enough: it requires a multifaceted approach.

Preventive measures, such as regular maintenance and equipment upgrades, are essential. Additionally, network optimization, for example algorithms that balance load across cells, can ease congestion and improve coverage.

Conclusion

The call drop event total for 8119060142 points to a break in the communication link between provider and user, one that leaves users stranded in silence. Each dropped call is a reminder of how fragile trust between service providers and consumers can be. By addressing the underlying causes of these drops, providers can restore that trust, improve user satisfaction, and reinforce their commitment to reliable and efficient communication.